Against Humanoid Robots

it's much harder than self-driving

A friend recently proposed, in all seriousness, that we would soon see humanoid robots replacing human labor in domestic jobs. A live-in robot nanny (à la The Jetsons) might take care of your kids, a robot plumber might fix your smart sink, and hotels might be staffed by robot maids, all within just ten years!

Now, I have yet to work in this field myself, but I did spend the last few years getting a fancy piece of paper with ‘Data Science’ written on it, and before that I had a healthy interest in the philosophy of action. This proposition seemed so ridiculously optimistic, so unaware of the obstacles to independent machine action, that I made a bet: I am confident there will not be commercially available humanoid robots capable of independently performing simple domestic tasks by July 1, 2034 (a little over ten years from the time of the bet). The stakes will remain private, but I assure you they are considerable.

This post exists to define the parameters of the bet and explain why I’m not holding my breath for humanoid domestic robotics.

[Note: Just as I was about to publish, this same friend sent me the announcement for the 1X NEO Beta. It doesn’t change my argument, but it is interesting enough to discuss further. I’ve now posted an addendum about the NEO and related topics that didn’t make it into this post.]

A Commercially Available…

For the purposes of this bet, it is not necessary for the robot to be cheap or in good supply; as improbable as I find the whole affair, an affordable humanoid robot is even less believable.

Let’s use the car market as an analogy: cars come in a very wide range of cost and quality, from old, second-hand lemons going for a few thousand, all the way up to brand-new brand-name luxury vehicles costing millions. Despite that, they’re almost always substantial purchases regardless of the buyer’s wealth; desire for quality, comfort, and status keeps pace with income pretty far up the scale. This is what it means for items like cars to be available, even though they’re not cheap.

In this way and others, humanoid robots will not be like cars. I expect that, if a market for them exists at all, they will still be very expensive, at a price point accessible only to the very wealthy, and supply will be quite low. There will not be a used, second-hand bot market, and the average person will not be able to access one via a pricing plan as they would a car.

Now, I would still count this as ‘commercially available.’ If the Crown Prince of Saudi Arabia has a gem-encrusted android butler, but one cannot be otherwise purchased for love nor money, that does not count. But so long as I can pay, get on a waiting list, and eventually receive a robot of a model that other customers are already using, that would satisfy this requirement.

It is also not necessary that they be profitable. An Uber-like situation in which continuous losses are offset by an ungodly torrent of VC funds is acceptable for the purposes of the bet.

Humanoid Robot…

A humanoid robot must have a body plan approximating that of a human: a torso supported by two legs, with two arms ending in manipulatory organs, and a distinct head atop the torso. A robot that moves around on tracks or wheels, which does not possess something analogous to human hands, doesn’t have a head, et cetera, does not satisfy this requirement.

I apply these restrictions for two reasons. First, a humanoid robot is desirable because it can navigate environments built for humans without assistance (e.g. stairs) and handle tools intended for human use (everything from a broom to a very small screwdriver, from a microwave to a teakettle) instead of the robot setting requirements for environment and appliances. A humanoid robot which can only sweep the floor if you bought the DomesTech UltraBroom® attachment (sold separately) does not satisfy this requirement, nor does a robot which comes bundled with a ‘smart’ kitchenware set.

Second, a humanoid robot for domestic use must, if its creators want any kind of wide adoption, either cross the uncanny valley or stay well short of it. Contemporary robot designs solve this by substituting smooth surfaces and voids for human faces, presenting self-consciously mechanical appearances instead of trying to fool the senses. This allows humans to project emotions and humanlike behavior onto them in the same way we do with animals, but I expect that a headless robot would be a step too far.

You can do plenty within these parameters. Contemporary humanoid robots have all gone for lots of degrees of freedom and limbs that can move like a contortionist’s. You could make the legs digitigrade instead of plantigrade (though I’m not sure why you would), try for soft, tentacular arms instead of rigid, jointed ones, experiment with Baymax-esque soft exteriors, and so on. None of these would violate the requirement.

Capable of Independently Performing Simple Domestic Tasks…

By which I largely mean cooking and cleaning.

A robot capable of broader maintenance tasks would be both easier and more difficult to create depending on the task: unclogging a bathtub is relatively easy if you have drain snakes on hand, while independently identifying and fixing something like a broken sink is much harder. But we’re not in the robot plumber business. We want to sell people live-in robots, so they have to make themselves useful every day.

Now, the technological obstacles can be split into hardware and software: robotics proper and software concerns like computer vision and translating natural language into embodied action.

I don’t think robotics proper is a substantial issue at this point. That’s not to denigrate the incredible, mind-blowing work that roboticists are doing, far from it. You’ve seen the Atlas demos. While the limitations of rotary motors put robots at a disadvantage in really delicate fine motor tasks, advancements in this field are coming thick and fast. Any quotidian, mechanical action a human can do, I’m pretty confident a robot will be able to pull off in a decade.

I’m not so optimistic about the software angle.

This is the part that gets me weird looks. I’m told that it makes no sense to bet against software, given the immense strides in AI made in recent memory. I’m told that translating natural language into robot action should be easier than, say, building a fully autonomous car. I think this is dead wrong.

Let’s return to the car analogy.

There are some reasons to think that a self-driving car should be harder, in software terms, than a humanoid robot. Cars are larger and heavier and designed to move at high speeds in environments in which a miscalculation could result in severe bodily harm or death to its passengers, bystanders, other drivers, or all the above. In order to achieve large-scale adoption, a fully self-driving car would need to be extremely safe and reliable, both for regulatory reasons (consider that US auto regulations are much looser than Europe’s, and we’re still far from meeting them) and for public confidence, both of prospective passengers and especially of pedestrians and other drivers.

On top of that, a self-driving car would need to operate on a wide variety of routes and in many environments, especially in adverse weather conditions. It needs to know to slow down (and maybe stop somewhere to get modified) if it’s driving on icy roads, to recognize and obey signage if it’s in an area where things like speed limits haven’t been digitally integrated, and to avoid pedestrians and animals. And (this is the part that my data ethics course drilled into my skull) it has to consider many such variables simultaneously and decide how to compromise on them. How a self-driving car should trade off risks to its passengers against risks to bystanders and other drivers, and how liability works in such a situation, remain open questions.

You can mitigate some of these issues by limiting these vehicles to established routes, slower speeds, milder climes, stopping them from operating in adverse weather, or limiting them to roads which have been certified to meet safety standards like paving quality and visibility. But a self-driving car loses value quickly as you add these restrictions, and beyond a certain point you’re inventing a less efficient bus route that your insurance company hates.

A relatively lightweight, slow-moving robot confined to a bounded, domestic space can’t possibly be as difficult, right?

Nope. It can be so, so much harder.

First, let’s point out that while roads and streets can be subject to a great variety of conditions and regulations, they have already been standardized and made legible to make it easier for machines to navigate them. A human driver, in the course of driving, acts like a machine, albeit a rather buggy one prone to disobeying limits and raging against other machines.

A home, a place where people actually live, is not standardized, and is often the exact opposite of legible.

A machine imitating a human driver is taking in a great deal of local information, but has only a small range of outputs. Accelerate, decelerate, brake, steer. If you’re feeling fancy, it might have independent control of headlights, signals, and windshield wipers. These outputs must be combined in sophisticated ways to achieve its goals, like parallel parking or not running over a child, but the actual space of a self-driving car’s possible actions in any instance is still quite constrained, and it assumes an environment which, though it might be damaged or modified by local conditions, is still designed to be navigated by a being like itself.

Further, a road is a transitory space. Once the car has moved through it, it loses relevance. The car does not need to remember the state of this stretch of road the last time it moved through it. Any input which does not resolve into a weather condition, an obstacle, or another car can be safely ignored. Even so, this remains an extremely difficult problem which has not been solved, and even optimistic projections put full self-driving at least half a decade out.

The space of possible actions for a humanoid robot at any moment is much larger than a car’s, both in the shallow sense that humanoid robots boast many more degrees of freedom (the Unitree G1 has 23, while the Boston Dynamics Atlas boasts 28), and in the more meaningful sense that, unlike a car which is expected to start in one place and end in another, the domestic robot must modify its environment in order to achieve its goals, and must use much more sophisticated and path-dependent combinations of outputs. Relevant inputs are also far more varied and numerous, for reasons we’ll see shortly.
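To put rough numbers on the shallow sense, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that every control or joint is chopped into 10 discrete settings and that a car has four independent controls. The joint counts for the G1 and Atlas are the figures quoted above. Real controllers are continuous, so only the scale of the comparison means anything.

```python
# Back-of-the-envelope comparison of instantaneous action spaces.
# ASSUMPTION: each control/joint is discretized into LEVELS settings;
# real controllers are continuous, so these counts only show scale.
LEVELS = 10

CONTROLS = {
    "car": 4,          # throttle, brake, steering, one lumped accessory control (assumed)
    "Unitree G1": 23,  # degrees of freedom quoted in the text
    "Atlas": 28,       # degrees of freedom quoted in the text
}

def joint_configurations(n_controls: int, levels: int = LEVELS) -> int:
    """Number of distinct settings if every control is set independently."""
    return levels ** n_controls

for name, n in CONTROLS.items():
    print(f"{name}: {joint_configurations(n):.1e} possible settings per instant")
```

The exponent is what matters here. Under this assumption every extra joint multiplies the space tenfold, and a domestic robot also has to chain these settings together over time, which the count above does not even touch.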

But let’s take this from the abstract to the concrete with a simple example.

Meet Andrew

In the distant future of the year 2034, I roll out of bed in my DomesTech SmartHome® (tagline: Home is where the Smart is) and groggily tell my live-in domestic robot, Andrew, to make me my typical breakfast: eggs, chistorra, and a glass of cold milk.

It steps off its charging port, I step into the shower, and the clock starts ticking. Andrew must… well, that’s the first problem.

We’ll assume away the serious, but less interesting, issue that ‘make me my usual breakfast’ is an instruction dripping with context: it’s morning, I want to eat at a table with plates and cutlery after showering and before leaving for work. There is a time limit here. There are also implicit commands I don’t state outright: I want Andrew to clean the table, the kitchenware, and the kitchen itself after I’m done, assess how much of the relevant ingredients remain, and add them to the grocery list if they are close to running out.

Let’s assume Andrew already knows all of this: it’s been with me for a year (I lost the bet a while ago and have long since surrendered to the new tomorrow), it knows how I like my eggs, it knows how the kitchen is laid out, et cetera.

The clock is ticking, Andrew has its instructions, and… I have misplaced the eggs.

See, I had a bit of a bender last night and, for reasons I can’t remember and Andrew can’t begin to comprehend, moved the egg carton out of its usual spot in the fridge and into the cupboard under the sink. This is totally irrational behavior and Andrew now has no better option than to search through the kitchen and hope they turn up in time. Worse, during last night’s bender, I wound up spilling some wine all over the carpet, which Andrew only became aware of when I activated it just now.

The resulting situation disrupts anything like a regular, routine plan of action. The clock is ticking. Should Andrew take the time to search for the eggs (and if so, how does it carry out such a search?) or try to get a new carton? If it chooses to search, when does it stop? It needs to not only understand its environment well enough to know where something should be, but where it might have gone if it is not in its intended place.
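Even the “when does it stop?” question has a simple-looking answer that only moves the difficulty elsewhere. Below is a minimal sketch that uses a greedy rule: check spots in order of probability per second of searching, and stop once the time budget is spent. Every location name, probability, and timing is invented for illustration. The rule itself is trivial; all the hard work lives in the priors.

```python
from dataclasses import dataclass

@dataclass
class Spot:
    name: str
    p_here: float      # hypothetical prior that the eggs are here (fridge already ruled out)
    check_secs: float  # hypothetical time to open and inspect this spot

def search_plan(spots, budget_secs):
    """Return the spots to check within the time budget, plus the total prior
    probability that the eggs are in one of them. Spots are checked in
    decreasing order of probability per second; the plan stops at the first
    spot that would overrun the budget."""
    ordered = sorted(spots, key=lambda s: s.p_here / s.check_secs, reverse=True)
    plan, spent, covered = [], 0.0, 0.0
    for spot in ordered:
        if spent + spot.check_secs > budget_secs:
            break
        plan.append(spot.name)
        spent += spot.check_secs
        covered += spot.p_here
    return plan, covered

# Invented priors: where might a hungover human have put the eggs?
kitchen = [
    Spot("counter", 0.30, 10),
    Spot("pantry", 0.25, 20),
    Spot("other cupboards", 0.20, 30),
    Spot("living room", 0.20, 90),
    Spot("under the sink", 0.05, 15),
]

plan, covered = search_plan(kitchen, budget_secs=60)
print(plan)               # ['counter', 'pantry', 'other cupboards']
print(f"{covered:.2f}")   # 0.75
```

With a 60-second budget, the plan covers 75% of Andrew’s belief and never opens the cupboard under the sink, which is exactly where the eggs are. Getting the priors and the budget right requires the model of my home and habits that the rest of this section is about.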

Should it leave the carpet be for the moment, prioritizing breakfast, or try cleaning the carpet now, or rolling it up and storing it? Given our previous handwaving, it is conceivable that the robot has already developed a sense of how I would prefer it to act, whether by observing me over the past year (implicit) or by defining such preferences during installation (explicit). But it needs to develop such a model; it cannot just be an unsophisticated action-doer, it needs to be a contextual agent.

Does Andrew know where the hydrogen peroxide is, or where it would make sense to temporarily store a dirty carpet? Its model of the home must include not only present objects, but also voids, places which are presently empty but where things could go.

Because these are literally everyday tasks that humans can perform with very little effort, we tend to underestimate how complex they actually are and how our ability to perform them reflects profound models of our environment, models which we are far from instantiating in machines. For further discussion, see David Chapman on dancing robots.1

These may seem like pointless contrivances, but I assure you they are not. Even if the robot can translate natural language into action, this is insufficient. It must not only create and maintain a full model of my home, complete with an understanding of each object within it and the purposes to which those objects can be put, even as some objects are transformed, but it must also act in accordance with a model of my needs and desires (and, almost certainly, some model of ethics and law) and take actions I did not even implicitly state in order to fulfill them. Worse, it must integrate its model of my desires with its environmental model and be on constant alert for deviations, such as wine stains on the carpet.

A home is not a factory. It is not a regulated, standardized environment meant to facilitate specialized labor. It is a complex, chaotic environment in which the desires arising from human living are latent, and taking action within that space requires skills far, far greater than the mechanical ability to fry some sausages, crack and whisk an egg, add salt, scramble with sausages, cook until golden-brown, and serve with a glass of cold milk. I am very particular about my breakfast, but I am more particular about my home. One of those particularities is that my home is a place to live in, and not a place which is designed to facilitate machine action. I’m not doing Andrew any favors here, and neither will any real living space into which it might be deployed.

Now, you may protest that I am asking too much. All this abstract stuff about plans and cognition is irrelevant: you want a robot that can whip up an omelet on short notice, the rest you can take or leave.

And if that is really, truly, all you want, I have good news. Your omelet-making robot is already a reality.

What? Oh, that wasn’t what you meant? Apologies, I forgot to develop a complex model of your desires informed by—but independent of—your explicit statements and instead just ran a search for ‘omelet-making robot’. Is there anything else I can help you with?

Another breed of non-technical techbro will protest that I’m still making things way too complicated. You just need to train the robot to perform a wide variety of simple tasks, pip3 install omelet, set out the ingredients and tools for it, and watch it go.

I agree that such a robot would be much, much easier to create. It may even be possible within a decade, for reasons I will explore below. But before you endorse this approach, I want you to stop imagining cool robots, and start imagining what it would be like to actually live with and use something like this.

Imagine, when you want an omelet, pulling out all the ingredients and kitchenware, measuring them out in the desired quantities, placing them in the right configuration, calling the robot over like a dog, telling it to make an omelet, and watching it go. That’ll be pretty cool the first time. Still pretty cool the second. But once the novelty wears off, you will realize that you have spent money for the privilege of micromanaging a tool instead of doing things yourself.

A convenience you have to micromanage is no convenience, and such a robot fails to replace human domestic labor, since humans are not only able but expected to consider their entire context to proactively work toward a goal. Any human this helpless and stupid would be fired immediately for sheer incompetence; this is not a sufficient standard for our domestic robot. You might still cover it in diamonds and sell it to a Saudi prince, but you’d have to bundle it with a full-time bot-wrangler, which kills the mystique.

All of the above is necessary because, unlike the past mechanization of labor, which first reduced a given task to a mechanical process for increased efficiency and then substituted machines specialized in those now-mechanical processes for humans, this push for domestic robots is skipping step 1. We’re now trying to substitute machines for humans on human terms, in a domain which has not yet been (and perhaps cannot be) mechanized.

In other words, domestic robots have to compete with human domestic labor, and human domestic labor is long on things like improvisation, social navigation, and the skills that get bundled together as ‘soft skills.’ On top of that, domestic service workers already get mistreated, and they have, you know, human rights and faces. The domestic robot, on the other hand, is not an ethical subject. How will our target customer react to a glorified Roomba messing up their dinner? Would they even be able to relax around such a thing, or would they constantly be watching it in case it screws up somehow?

As I keep repeating, a home is not a factory. People do not want to live in places that become like factories (cf the late James C. Scott’s Seeing Like a State and Le Corbusier’s various atrocities against urban planning). Your main target demographic for this product is wealthy people with big houses who already have domestic servants and want to replace them with robots, probably because they live in a subculture where anything ‘techy’ is a status symbol. That is a demographic that likes the finer things in life and possesses the resources to obtain them. It also (certain Silicon Valley subcultures notwithstanding) won’t pay you for the privilege of making their homes less livable.

Yet More Problems

I will point out once again that the above example is simple. A home which is almost entirely clean and orderly except for one (1) mess and a misplaced egg carton, with only one human resident, subject to a very stable daily routine.

Imagine, now, the unbearable complexity of two people. Two people with different schedules, habits, needs, and desires. Now add houseguests, children, extended family, and pets, possibly all at the same time. In order to effectively act within this environment, the robot must now consider the social environment beyond the needs and desires of a single person. This is, of course, something humans working these jobs already do; along with opposable thumbs and long distance running, social modeling is our thing, something we almost never think about explicitly. In other words, we take it for granted. Andrew has a high bar to clear.

The robot has two options. The first is to model the needs and desires of the house as a whole, a sort of shifting gestalt entity. The second is to give someone admin permissions, including the ability to cancel the instructions of others and restrict whose instructions it obeys.

If you choose the latter option, which is almost certainly the more realistic one, you quickly discover that blame for the robot’s decisions falls on the admin. Though you can choose to be permissive or strict, no position is free of abuse. Imagine telling the robot to stop serving whiskey to your drunk uncle at Thanksgiving. Imagine one of your kids telling the robot to take your other kid’s homework and throw it in the trash. Imagine domestic arguments you’ve had with loved ones or roommates, and imagine if, the whole time, there was a robot in the corner desperately trying to figure out whose instructions it should follow first, like a child of impending divorce vacillating between mommy and daddy.
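The admin option is easy to write down, and that is part of the problem. Below is a minimal sketch of a hypothetical rank-then-recency rule. The names, ranks, and tie-breaking are all invented for illustration. It handles the drunk uncle only because someone configured the ranks, and it happily executes the homework sabotage because nothing in the rule knows that sabotage is wrong.

```python
from dataclasses import dataclass

@dataclass
class Instruction:
    speaker: str
    target: str   # what the instruction is about
    action: str
    time: int     # arrival order

def arbitrate(instructions, ranks):
    """Keep one instruction per target. The highest-ranked speaker wins; among
    equal ranks, the most recent instruction wins. Speakers missing from
    `ranks` (e.g. guests) get rank 0."""
    winners = {}
    for ins in instructions:
        current = winners.get(ins.target)
        new_key = (ranks.get(ins.speaker, 0), ins.time)
        if current is None or new_key > (ranks.get(current.speaker, 0), current.time):
            winners[ins.target] = ins
    return {target: ins.action for target, ins in winners.items()}

# Hypothetical household: the admin outranks the kids; the uncle is a guest.
ranks = {"me": 2, "kid_a": 1, "kid_b": 1}
thanksgiving = [
    Instruction("uncle", "whiskey", "pour another glass", 1),
    Instruction("me", "whiskey", "stop serving the uncle", 2),
    Instruction("uncle", "whiskey", "pour another glass", 3),
    Instruction("kid_a", "kid_b's homework", "throw it in the trash", 4),
]
print(arbitrate(thanksgiving, ranks))
```

The uncle is cut off only because the admin spoke up and ranked themselves first. The homework goes in the trash because nobody outranking kid_a happened to object. In both cases, the outcome traces back to the admin’s configuration, and that is where the blame lands.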

The former option is less plausible, but so much more fascinating. It’s the kind of problem I would love to work on professionally, just for the philosophical implications. You’d be giving a machine a measure of co-equality, the duty to judge and make decisions based on the Good of a shifting group of human beings. I wonder what Andrew thinks about Aristotle?

In either case, the problem of operating a domestic robot is inseparable from the problem of getting along with other humans, and you, dear reader, know exactly how difficult that can be.

There’s another part of this I should address before we move on. You may be objecting to my assumption that ‘modeling and acting on a person’s needs and desires’ is some kind of really hard problem. After all, anticipating your desires is what data-driven algorithms already do in entertainment, and it won’t be long until digital helpers, like the Alexa, track and maintain quotidian needs and your fridge can place an order to refill itself when it runs out of stuff. This is a non-issue!

For the sake of argument, let’s bulldoze right over the colossal distinction between ‘predict which 20-second video will keep you doomscrolling’ or ‘knows to order another carton of milk’ vs caring for your needs in real life, and just assume it can do that anyway.

That alone is not sufficient. It’s not enough to just anticipate and address your needs and desires, it also needs to do so in a way that is human-legible. That’s academic-speak for that robot had damn well better be able to explain itself.

As it stands, machine interpretability is a big problem in generative AI. Short version: we built it, but we don’t know how it gets from a given input to a given output, and we don’t have the ability to trace and interrogate any single decision it makes. No, Aaron, not even in principle. Now, there have been recent breakthroughs in this field, such as Anthropic’s ability to identify and manipulate individual features inside Claude (cf Golden Gate Claude). But that’s still a long way from being able to accurately explain why it made a complex decision, in a way that will persuade a human being that the decision was both benign and correct.

These are not problems we can solve readily in robots for the simple reason that we’ve been trying to solve them in humans since the Holocene. We do not have an objective and thorough account of our own needs or the reasoning for our own decisions (cf Elephant in the Brain and the entire field of psychology). Caring for the needs of Others, Others whom we know intimately and love, is still not easy for us! We’re not solving this in the next decade, but I positively live for the day a paying customer sues a robotics company because a robot told them ‘No.’

In short, equipping a robot to independently perform simple domestic tasks requires solving the central unsolved problem of machine vision, plus large swathes of psychology and phenomenology, not to mention actually complying with regulation and bringing this technological terror to market, and we have to do it all…

By 2034

Let’s take a peek at the state of the art in robotics for a second.

The humanoid robotics space is fairly diverse, with companies like Aldebaran making friendly, almost toy-like robots designed (nowadays, mainly) for use in healthcare and education, and Hanson working to create robots that look human and can hold a conversation. These are not the kinds of robots we’re interested in. A domestic robot doesn’t have to pass as human (it’s almost certainly better that it doesn’t) and needs to work out of the box, not be a programming platform.

The robotics companies that come to mind in this domain are Boston Dynamics with the Atlas (as well as other models testing capabilities like navigation and fine manipulation), Tesla with the Optimus, and Unitree with the G1.

You’ve almost certainly heard of the first two, but Unitree is more obscure, a Chinese company that released the humanoid G1 only recently. I include it because, while writing this article, the aforementioned friend linked me to it and announced he had won our bet less than a year in. We’ll see how that holds up.

In terms of hardware, these three robots are converging on broadly similar approaches. These are all humanoid frames of the kind we’ve described earlier, which navigate their environment first and foremost by vision. The Atlas and G1 both feature a combination of depth-perceiving cameras and lasers for rangefinding. The Optimus, like Tesla’s cars, relies exclusively on cameras, with no LiDAR or other laser rangefinding. I’m not clear on why, but it seems to be company dogma.

In terms of mobility, the Atlas is by far the most advanced, with the ability to run and jump, while the Optimus and G1 are more inclined to toddle around. However, this is not a huge advantage for Atlas in the domestic domain, since domestic robots will not often need to backflip.

Both the Atlas and Optimus are capable of some basic tasks: staying upright, navigating an environment autonomously, and some variety of autonomous sorting, which means identifying discrete objects in their environment and moving them to appropriate locations. This kind of functionality is the foundation of all the more complex behaviors a domestic robot will need. Information on the G1’s capabilities is less available, and I have not seen any footage demonstrating its ability to sort or perform similar behavior. I take this to mean it does not have this ability.

Through all this discussion of specs and capabilities, you will note one thing: none of these companies even claim to perform any of the advanced autonomous processes a domestic robot requires. Translating natural language into machine action, assigning purposes to discrete objects, selecting tools and resources for a task, none of this is on the horizon. When Tesla’s Musk claims the Optimus is performing (unspecified) autonomous tasks in (unspecified) factories, this should be interpreted in the narrowest way possible.

If any of these companies had gotten anywhere close to any of the autonomous processes listed above, they would be demonstrating it on stage, not relegating their wundertech to shadowy production lines. If you don’t see it, it’s not there, period.

And Yet…

It may surprise you to hear that I don’t think a domestic humanoid robot is impossible to build over the next decade.

In spite of the immense difficulties facing such a project, many of them potentially insoluble philosophical problems, it’s not inconceivable that we might just… slide past.

Five years ago, GPT-2 was hallucinating and stringing together dream sequences. Five years before that, the stunning cutting edge of human-machine interaction was the Alexa. Youngsters reading this may not remember, but Siri freaked people out back in the day. It, and all its ancestors going back to ELIZA, operated according to formal systems: increasingly complex linkages of keywords to vast banks of responses, at first predetermined, later integrating search engines.

Starting with GPT, the new wave of text generation operated on a totally different principle. I’m fond of Sam Kriss’ description of this same shift in translation software:

These systems are contextual and vast, synthetic rather than analytic. Their elements appear in no particular order; what matters is the shifting network of interrelationships between them. They’re entirely comfortable with redundancy, duplication, arbitrariness, ambiguity, isomorphy, pleonasm, and polyvalence.

Where the older, analytical systems were designed, the new, synthetic models are raised. There’s plenty of design going on around them, but the core of these systems involves feeding a carefully processed gob of text to a machine loosely inspired by the structure of a human brain, and something approximating intelligence comes out the other end. You get the input and the output, but everything in between is a black box that resists insight. And, while I don’t want to in any way denigrate the genius advances made by professionals in this field, the growth of these models is mainly driven not by deliberate design choices but by feeding them more information, faster.

The big money in robotics is currently moving in the same direction. The Unitree G1, for example, is currently on sale (at least, it’s supposed to be; it’s out of stock), but it transparently is not meant for domestic or workplace use. It’s a development platform. Though Unitree isn’t exactly forthcoming about the details, training almost certainly involves a combination of natural language prompts, sensory data, and teleoperation. You give a human operator an instruction, which they accomplish by remotely controlling the robot. That instruction, plus the sensory information and movements the robot recorded, is then saved to a vast bank of data which is later used to train a model. The goal of such a model would be to generalize beyond its training data such that, given a natural language instruction, it can accomplish that instruction independently in a novel environment. Tesla is currently doing something similar with Optimus (note: I found out about this after writing the above paragraph), recruiting ‘Data Collectors’ to perform basic mechanical tasks while hooked up to motion capture suits.
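Here is a rough picture of what might end up in that bank of data. This is a minimal sketch that assumes a plain behavior-cloning setup, where a model learns to map an instruction plus sensor readings to the operator’s commands. The record layout and field names are hypothetical, not Unitree’s or Tesla’s actual format.

```python
from dataclasses import dataclass, field

@dataclass
class Step:
    camera_frame: bytes            # compressed image from the robot's cameras
    joint_positions: list[float]   # joint angles the robot measured at this instant
    operator_command: list[float]  # joint targets the human teleoperator sent

@dataclass
class Episode:
    instruction: str               # natural-language task given to the operator
    steps: list[Step] = field(default_factory=list)

def to_training_pairs(episode):
    """Flatten one teleoperated demonstration into (input, target) pairs for
    behavior cloning: the model sees the instruction plus what the robot
    sensed, and is trained to reproduce what the operator commanded."""
    return [
        ((episode.instruction, step.camera_frame, step.joint_positions), step.operator_command)
        for step in episode.steps
    ]

# A toy, hypothetical two-step demonstration with three joints.
demo = Episode("put the mug in the sink")
demo.steps.append(Step(b"<jpeg bytes>", [0.0, 0.1, 0.2], [0.0, 0.15, 0.2]))
demo.steps.append(Step(b"<jpeg bytes>", [0.0, 0.15, 0.2], [0.05, 0.2, 0.2]))
pairs = to_training_pairs(demo)
print(len(pairs), "training pairs from one short demonstration")
```

Every pair costs a human operator’s real time to produce, and the camera frames dominate the storage. The two differences below come from exactly these costs.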

This is the same general principle as training an LLM, with two huge differences.

First, while motion data is probably fairly compact (that’s my assumption, having not worked in this field before), visual sensor data is very, very bulky compared to text, which packs a great deal of information into very few bytes. A single frame of HD video is probably on the order of 30 KB after compression, which is about as much text as a long essay. As with training image and video generation models, this massively raises the costs of storing the data and training the model itself.
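The arithmetic behind that comparison is easy to sketch. The 30 KB per frame is the estimate above. The frame rate and the roughly six bytes per English word (five letters plus a space) are assumptions made here for illustration.

```python
FRAME_BYTES = 30_000   # ~30 KB per compressed HD frame, the estimate in the text
FPS = 30               # ASSUMPTION: a typical video frame rate
BYTES_PER_WORD = 6     # ASSUMPTION: ~5 letters plus a space in plain English text

def video_bytes(seconds: int, cameras: int = 1) -> int:
    """Bytes of compressed video recorded over `seconds` by `cameras` cameras."""
    return FRAME_BYTES * FPS * seconds * cameras

def words_equivalent(n_bytes: int) -> float:
    """How many words of plain text would fit in the same number of bytes."""
    return n_bytes / BYTES_PER_WORD

print(f"one frame ~ {words_equivalent(FRAME_BYTES):,.0f} words of text")                 # 5,000
print(f"one camera-hour ~ {video_bytes(3600) / 1e9:.2f} GB")                             # 3.24
print(f"one camera-hour ~ {words_equivalent(video_bytes(3600)) / 1e6:,.0f} million words")  # 540
```

Under these assumptions, a single camera recording for an hour produces as many bytes as roughly half a billion words of text. That figure comes before adding depth sensors, extra cameras, or joint telemetry.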

Second, text data is extremely cheap and easily available, as are images and video (assuming, of course, that you’re just scraping the web and not bothering to get permission to use any of it). Neither the sensory nor the motion data these robot models need is readily available. The robotics company will have to produce all of it, and changing things like the technical specifications of the robot’s sensors or body will lead to generalizability issues in future models.

Because of this, we should expect the training process for these kinds of models to be far, far slower than what we expect from LLMs, and much more expensive, with actual data collection taking big bites out of your schedule and budget, never mind how actual production of these robots as training platforms forms a bottleneck.

At the same time, the computational side of the training process might move faster than we’d anticipate, both because of continued improvements in computation and the possibility that related fields make breakthroughs in the meantime. If the accelerated schedule to AGI proposed in the Aschenbrenner manifesto comes to pass, it’s not inconceivable that machine learning across a wide variety of domains could become far more efficient.

But even these possible improvements might be undermined by the necessary scale. As of writing, the largest companies in the AI space are racing to monopolize vast quantities of compute and energy in anticipation of the exponential training requirements of next-gen AI. On top of that, we also need to consider the possibility that these kinds of embodied tasks, never mind generalizations to contextual behavior, are far, far more complex than text generation is, such that they just plain require more training than a language model. There may just not be enough compute available to train a robot this advanced, never mind sharing those resources with the chatbots.

There’s still room for ingenious wizardry: fitting humans with wearables to gather sensory and movement data in daily life, for example, could move the schedule up a fair bit.

But not to within a decade.

For context, let’s come back to the car analogy for the third and final time.

Self-driving is one of those technologies that has been ‘just around the corner’ for a while now. Tesla, for example, claimed self-driving already worked in 2016. In 2017, it claimed the feature would be ready in 2018. In 2019, it would be ready by 2020. In 2020, by 2021. In 2023, a beta was released in North America, and swiftly recalled. As of writing, the median Manifold bet points to Level 5 self-driving by 2029.

Considering everything we’ve established above (how the training data is in much lower supply and far more expensive to gather compared to sticking cameras on a car and gathering telemetry, how the outputs are far more numerous, complex, and sensitive than they are for a driving machine, how it must navigate an environment which is neither legible nor standardized, how an effective humanoid robot would need to explain itself to human users and integrate both detailed local models and social context into its decision-making), do you seriously believe we will get domestic robotics ~5 years after we maybe expect to get self-driving cars? When the global market value of autonomous vehicles is credibly two orders of magnitude greater than that of humanoid robots, domestic or otherwise (1, 2)?

Self-driving cars are hard. We should anticipate that humanoid robots of the kind we have discussed here will be far, far harder. They will require, at minimum, much more funding and truly massive data-gathering initiatives. Unitree using its robots to train what I can only assume is an exclusively internal model is not a serious approach to solving this problem in a short time frame. Tesla recruiting ‘dozens’ of people to dance the robot is not a serious approach to solving this problem in a timely manner. Boston Dynamics, as always, combines minimal hype with sensible public-facing attempts to solve these issues one at a time, and godspeed to them.

I expect that, if you took some engineers from any robotics company and got them drunk enough to violate their NDAs, they’d agree that these kinds of time horizons are way too short, no matter what their leadership is telling tech reporters. It’s hype for unproven technology, motivated by a need to bump share prices (Tesla) or absorb funding from a government hungry for tech solutions (Unitree).

So from here on out, I expect anyone claiming there will be humanoid robots that can independently cook and clean within a decade is either:

a) in possession of profound domain expertise and secret knowledge about how the field will evolve, or

b) fundamentally unserious and uninformed

and I don’t expect to be hearing from robotics wizards anytime soon.

With all that said, we have finally come to the end of- OH COME ON!

The Big Stinkin’ Problem

I’ve leveled so many objections to the domestic robot here that I almost feel bad including this one. It’s not one of my core objections, and I won’t disqualify any humanoids for not including it, but I feel that proponents of domestic robotics have indulged in a total failure of imagination.

Quick question: would you hire a human being for domestic work, mainly cooking and cleaning, if they had no sense of smell or taste?

When I ask this question, I usually hear edge cases. A cook without smell or taste might, hypothetically, still be able to do the job purely through experience with ingredients, a sort of culinary Beethoven. It would still be possible to clean without a sense of smell by paying close attention, though plenty of problems would be detectable only by scent. But would you, or rather our hypothetical customer, hire them? Almost certainly not.

We don’t think about this too much because taste and smell are not our primary senses for exploring the world, though the spread of temporary or permanent anosmia from Covid has brought more attention to the condition. As a congenital condition, anosmia is also much, much rarer than blindness or deafness. According to the Cleveland Clinic, around 1000 Americans suffer from congenital anosmia. Not 1 in 1000, 1000 total, compared to over a million congenitally blind. It’s not a disability that gets a lot of attention or accommodation.

But despite smell and taste being less directly involved in one’s day to day, consider how many quotidian tasks would be much harder without them, even setting aside the reduction in quality of life. Learning to cook would be very difficult. Breakdowns in plumbing would go unnoticed longer than they would for most people. You would struggle to identify putrid foods. You’d always be paranoid about how others perceived your own scent.

Now consider the field of machine olfaction. In particular, and I mean no disrespect to the professionals working on such problems, it barely exists.

Though it isn’t our primary sense and is less sensitive than that of many other animals, human olfaction is complex in a way that has proved very resistant to mechanization, not least because achieving anything resembling human olfaction would require the ability to detect and identify an implausible number of distinct molecules. Even limiting ourselves to a smaller range of more common or important scents still pushes sensor technology well beyond current limits.

Taste is very closely related, in that a taste judgment overlaps heavily with the scent of the same item, and in that the fundamental problem is similar, involving detection and identification of molecules in a liquid solution rather than in air. As far as I can tell, a distinct field of machine taste doesn’t exist at all, and the possibility of a machine trying to determine if something tastes ‘good’ is totally unexplored.

This is the kind of problem most people won’t even consider until their domestic robot fails to realize their dog fouled the carpet or they get food poisoning from a robot-cooked meal using bad ingredients. It places so many limitations on the ability of such a domestic robot to act without supervision that customers will end up tearing their hair out. You might get androids replacing factory workers and self-driving cars replacing taxis, but you simply will not replace human domestic labor without, at minimum, a half-decent imitation of human olfaction. At this stage, that might be one of the bigger challenges I’ve presented here.

So yeah. I’m not holding my breath for domestic robotics. If you find my judgment offensive, I am always open to making more bets and taking your money.

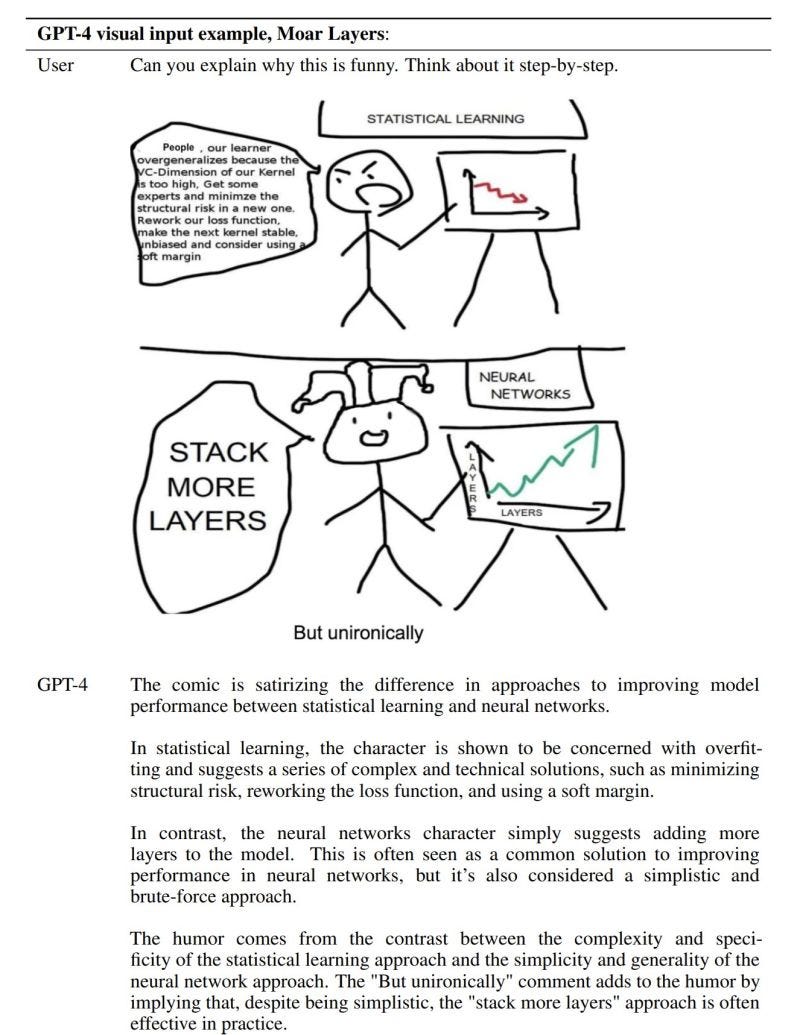

I think you are right that humanoid robots won't be common in 10 years, but you are wrong in your comments about the software problem. I asked Claude about the situation:

"Suppose a household robot who is supposed to maintain the house and cook breakfast discovers that

1. The eggs are not where they are supposed to be in the fridge

2. There is spilled wine on the carpet."

This was its reply:

"This scenario presents an interesting challenge for a household robot. Let's consider how it might approach these two unexpected situations:

Missing eggs:

The robot would likely:

Check other areas of the fridge in case the eggs were misplaced

Look in other possible storage locations (pantry, countertop)

Add eggs to a shopping list or alert the homeowners about the missing item

Consider alternatives for breakfast that don't require eggs

Spilled wine on the carpet:

The robot would prioritize this issue as it requires immediate attention to prevent staining. It would:

Assess the extent of the spill

Select appropriate cleaning materials (e.g., paper towels, carpet cleaner)

Begin the cleaning process:

a) Blot the excess liquid

b) Apply a cleaning solution

c) Continue blotting until the stain is removed

Use a fan or other method to dry the area

Mark the area for a deep clean later if necessary

The robot would need to prioritize these tasks. Cleaning the wine spill would likely take precedence over the breakfast preparation due to the time-sensitive nature of preventing a permanent stain.

Would you like me to elaborate on any aspect of how the robot might handle these situations?"

I feel like this is a reasonable response. The robot might not find the eggs, and it might not do the same job you would have done at cleaning up the wine, but its responses are within the range of what I would expect a human household servant to do. Before 2018, you would have been totally right: we had no idea how to do that. But LLMs now understand what humans would want, in the sense of "understand" that matters here. That's no longer the sticking point.